Generative artificial intelligence is reshaping industries worldwide, from law and education to healthcare. Yet AI hallucinations are among the most significant risks of adopting these tools without caution. An AI hallucination occurs when a system confidently produces false or fabricated information that appears accurate on the surface. Unlike simple technical glitches, these errors can mislead users into making flawed decisions with real-world consequences.

In healthcare, where accuracy is paramount, AI hallucinations raise urgent concerns. Misinformation could slip into patient records, diagnostic support systems, or compliance documentation, potentially undermining both patient safety and regulatory trust. This article examines what AI hallucinations are, why they happen, real-world examples, the specific dangers for healthcare, and what organizations can do to mitigate these risks.

Understanding AI Hallucinations

AI hallucinations occur when a large language model (LLM) generates false information that is presented as fact. These outputs often sound convincing, polished, and professional because the system is optimized for linguistic fluency rather than factual correctness. As a result, people unfamiliar with the inner workings of AI often mistake hallucinations for reliable knowledge.

Unlike search engines, which return sourced documents, generative AI models are prediction machines. They assemble sentences by predicting the most statistically likely sequence of words, without any built-in fact-checking mechanism. This design explains why they can create coherent paragraphs that look authoritative yet are entirely fabricated.
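The prediction mechanism described above can be illustrated with a deliberately tiny sketch. This is not how a production LLM is built; it is a toy bigram model that always picks the most frequent next word. The corpus, the placeholder names `drugA` and `drugB`, and the function names are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Made-up training text with placeholder drug names (not real medical claims).
CORPUS = [
    "drugA lowers fever",
    "drugB lowers blood pressure",
    "drugB lowers blood pressure",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, start, max_words=6):
    """Greedy generation: always append the most frequent next word."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        next_word = counts[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)

model = train_bigrams(CORPUS)
sentence = generate(model, "drugA")
print(sentence)                      # drugA lowers blood pressure
print(sentence in CORPUS)            # False: the model never saw this claim
```

The generated sentence is grammatical and confident, yet it appears nowhere in the training text. Nothing in the generation loop checks whether the claim is true; it only follows word statistics.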

For healthcare teams, this means a chatbot summarizing medical research or drafting clinical notes could seamlessly blend accurate details with dangerous errors, all delivered in the same professional tone.

Causes of AI Hallucinations

Why do AI hallucinations happen? The core reason lies in the architecture and training process of large language models. These systems are trained on massive datasets of text pulled from books, articles, websites, and forums. While the data is vast, it contains biases, inaccuracies, and outdated information. Worse, the models lack a mechanism to distinguish fact from fiction.

- Data Quality Issues: Training data often includes both reliable sources and misinformation. The model treats both as equally valid patterns.

- No Built-In Verification: LLMs don’t fact-check; they only generate the most likely next word or phrase. Accuracy is not part of their objective function.

- Pressure for Fluency: AI is rewarded during training for sounding coherent, not for being truthful. This incentive bias favors fluency over correctness.

- Complexity of Human Knowledge: Medicine, law, and compliance involve nuanced details. A model trained broadly may “fill gaps” with plausible fabrications when it lacks exact information.

This combination makes AI hallucinations a systemic issue, not a rare glitch. Even advanced models can fail at basic factual questions. Research cited by Search Engine Journal reported that large AI systems answered fewer than 50% of knowledge-based questions correctly, a sobering reminder of their limitations.

In sectors like healthcare, this is particularly alarming. An accuracy rate below 50% is unacceptable when decisions involve patient treatment or regulatory compliance.
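To see why an accuracy rate near 50% is so damaging, consider a document that contains several separate factual claims. The sketch below assumes each claim is correct independently with the same probability, which is a simplification introduced here and not a finding from the cited research. The function name is hypothetical.

```python
def prob_all_correct(per_claim_accuracy: float, num_claims: int) -> float:
    """Probability that every claim is correct, assuming independent errors."""
    return per_claim_accuracy ** num_claims

# How quickly reliability collapses as a document makes more claims.
for n in (1, 3, 5, 10):
    chance = prob_all_correct(0.5, n)
    print(f"{n} claims at 50% accuracy each: {chance:.4f} chance all are correct")
```

Under this assumption, a five-claim summary is fully correct only about 3% of the time (0.5 to the fifth power is 0.03125), so errors per document are the norm and not the exception.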

Examples of AI Hallucinations

Real-world cases illustrate why AI hallucinations are so dangerous. These examples show how confident yet false outputs can slip past professional scrutiny:

The ChatGPT Legal Case Incident

In 2023, a lawyer submitted court filings that cited fabricated case law invented by ChatGPT. The system generated non-existent precedents with realistic formatting, and the lawyer, trusting the AI, incorporated them into his brief. The court sanctioned him once the error was uncovered (Stanford HAI provides a detailed analysis of the case). This event highlighted how over-reliance on generative AI can undermine professional credibility and legal processes.

Healthcare Documentation Risks

Imagine an AI transcription tool that mistakenly inserts “penicillin allergy” into a patient record when no such allergy exists. Downstream clinicians relying on that record might alter treatment plans, delaying care or prescribing less effective medication. Such fabricated data in Electronic Health Records (EHRs) is not just an error—it’s a compliance and safety hazard.
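One way to catch this kind of inserted detail is to check every AI-drafted entry against the source it was supposedly derived from. The following is a minimal sketch under stated assumptions: the `AllergyEntry` type, the `source` labels, and the sample transcript are all hypothetical, and the check is a crude substring match that does not understand negation (a transcript saying "no penicillin allergy" would still count as a match).

```python
from dataclasses import dataclass

@dataclass
class AllergyEntry:
    substance: str   # e.g. "penicillin"
    source: str      # hypothetical labels: "ai_draft" or "clinician"

def unsupported_allergies(entries, transcript):
    """Return AI-drafted entries whose substance never appears in the transcript."""
    text = transcript.lower()
    return [
        entry for entry in entries
        if entry.source == "ai_draft" and entry.substance.lower() not in text
    ]

# Made-up visit transcript and AI-generated draft record.
transcript = "Patient reports a pollen allergy. No known drug allergies."
draft = [
    AllergyEntry("penicillin", "ai_draft"),
    AllergyEntry("pollen", "ai_draft"),
]

for entry in unsupported_allergies(draft, transcript):
    print(f"Needs clinician confirmation: {entry.substance}")
```

A check like this cannot prove an entry is correct. It only surfaces entries with no trace in the source material so that a clinician can confirm or reject them before they reach the record.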

General Knowledge Errors

Studies, including those reported by MIT Sloan, show that AI models can confidently give incorrect answers to factual questions, ranging from historical dates to basic science. These errors are not always obvious, making it easy for users to accept them as truth.

These cases demonstrate how hallucinations move beyond technical quirks to affect real people, organizations, and legal accountability. In healthcare, the stakes are even higher.

Risks of AI Hallucinations in Healthcare

In healthcare, hallucinations have the potential to cause irreversible harm. Unlike entertainment or marketing, where a false AI-generated detail may be inconvenient but not catastrophic, healthcare depends on rigorous truth.

- Patient Safety: Hallucinated data in patient records could lead to misdiagnosis, improper treatment, or adverse drug interactions.

- Diagnostic Errors: AI tools may invent correlations between symptoms and diseases, leading clinicians toward incorrect conclusions.

- Misinformation Spread: Chatbots designed to provide patient advice could unintentionally promote unsafe practices.

- Compliance Breaches: Hallucinations that misrepresent laws like HIPAA or GDPR could result in regulatory non-compliance, fines, or reputational damage.

As NCBI research emphasizes, trust is fundamental in healthcare. If patients or regulators believe that AI systems are unreliable, adoption will stall, no matter how advanced the technology may be.

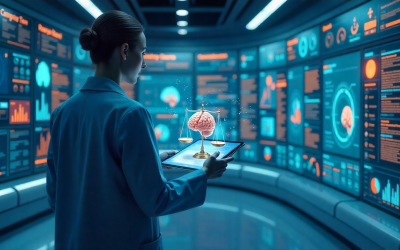

Improving AI Accuracy

The risks of AI hallucinations are serious, but they are not insurmountable. Healthcare organizations can implement safeguards that reduce exposure to misinformation while still leveraging the benefits of generative AI.

- Rigorous Verification: Require human review of AI-generated documentation, especially in high-risk areas like diagnostics and compliance.

- Fact-Checking Layers: Pair generative AI with retrieval-augmented systems that cross-check outputs against verified medical databases.

- Clinical Trials for AI Tools: Insist that healthcare applications undergo evaluation similar to that required of medical devices before deployment.

- Governance Frameworks: Develop internal compliance protocols aligned with NIST AI RMF and OECD AI Principles.
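The first two safeguards above, human review and a fact-checking layer, can be combined into a simple routing rule. The sketch below is one possible shape for such a rule, not a reference implementation: the `Draft` type, the claim keys, and the `VERIFIED_FACTS` store are hypothetical stand-ins for a real verified database, and the values in it are placeholders.

```python
from dataclasses import dataclass

# High-risk areas named in the list above.
HIGH_RISK_CATEGORIES = {"diagnostics", "compliance"}

# Hypothetical stand-in for a verified reference source (placeholder values).
VERIFIED_FACTS = {
    "policy_x_review_interval_days": "30",
}

@dataclass
class Draft:
    category: str   # e.g. "diagnostics", "compliance", "scheduling"
    claims: dict    # claim key -> value as stated by the model

def review_draft(draft, verified):
    """Return (needs_human_review, unverified_claim_keys).

    A claim counts as unverified if it is missing from the verified
    store or disagrees with the stored value.
    """
    unverified = [
        key for key, value in draft.claims.items()
        if verified.get(key) != value
    ]
    needs_human = draft.category in HIGH_RISK_CATEGORIES or bool(unverified)
    return needs_human, unverified

draft = Draft("scheduling", {"policy_x_review_interval_days": "90"})
needs_human, unverified = review_draft(draft, VERIFIED_FACTS)
print(needs_human, unverified)
```

The design choice here is to fail closed: anything high-risk, and anything the verified source cannot confirm, goes to a person. A claim that is absent from the store is treated the same as a claim that contradicts it.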

Ultimately, the mantra should be: trust, but verify. By embedding safeguards and human oversight, organizations can benefit from AI efficiency without compromising accuracy or compliance.

“When it comes to AI in healthcare, innovation must never outrun accountability.”

Conclusion

The danger of AI hallucinations is not hypothetical; it is a present reality with implications across industries. For healthcare, the risk is magnified because errors can directly affect patient lives and regulatory obligations. But with vigilance, structured safeguards, and compliance-oriented governance, organizations can reduce these risks.

Healthcare innovators and compliance officers must balance innovation with responsibility. AI offers enormous potential to streamline workflows, reduce costs, and expand access to care—but only if deployed with a clear understanding of its limitations.

Useful Links:

- MIT Sloan: When AI Gets It Wrong – Addressing AI Hallucinations and Bias

- Stanford HAI: AI on Trial – Legal Models Hallucinate in 1 out of 6 (or More) Benchmarking Queries

- Stanford HAI: Understanding Liability Risk from Healthcare AI

- Harvard Misinformation Review: New Sources of Inaccuracy – A Conceptual Framework for Studying AI Hallucinations

- Stanford HAI: 2024 AI Index Report – Responsible AI