Canada

1) Mandatory Compliance for Legacy Systems (Jan 12, 2026)

The Treasury Board of Canada Secretariat has reaffirmed the final deadline for all federal automated decision-making systems (ADMS). Systems developed or procured before June 24, 2025, are no longer exempt and must achieve full compliance with the Directive on Automated Decision-Making by mid-2026. This mandate requires rigorous documentation of client feedback, human-in-the-loop safeguards, and traceability protocols.

Directive on Automated Decision-Making

How it applies to AI in Healthcare:

Federal health tools, including those used by Health Canada and Indigenous Services Canada, must now undergo mandatory Algorithmic Impact Assessments (AIAs). The assessments are meant to surface hidden biases in AI used for clinical triage or benefits eligibility and to keep those systems under human oversight. Similar requirements will likely be adopted outside the current federal scope.

2) Mandatory Electronic Regulatory Enrolment (Jan 13, 2026)

Health Canada has officially transitioned to a mandatory Regulatory Enrolment Process (REP) for all Class II, III, and IV medical devices. As of January 13, 2026, the agency has permanently ended email-based submissions, requiring all regulatory transactions to be conducted through the Common Electronic Submission Gateway (CESG).

How it applies to AI in Healthcare:

Manufacturers of AI-enabled Software as a Medical Device (SaMD) must now use structured XML-based templates for filing. This change streamlines the review of AI model updates and ensures that the “lifecycle monitoring” of adaptive algorithms is captured in a standardized digital format.

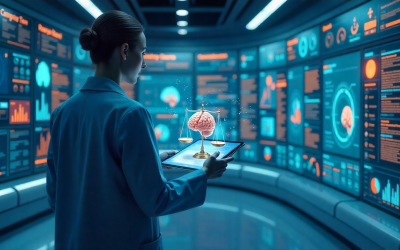

3) Guide on Departmental AI Responsibilities (Jan 16, 2026)

The Treasury Board released a comprehensive governance guide defining the specific roles of Chief Data Officers (CDOs) and Chief Information Officers (CIOs) in AI adoption. The guide mandates that departments implement robust tracking and data integrity protocols, ensuring that “foundational data” is of high quality before AI deployment.

Guide on Departmental AI Responsibilities

How it applies to AI in Healthcare:

The framework identifies priority areas for AI within Health Canada, specifically targeting the use of AI to enhance cancer screening accuracy and optimize personalized drug treatment pathways.

United States

1) FDA Agentic AI Deployment (Jan 12, 2026)

Following the successful 2025 pilot of the “Elsa” assistant, the FDA officially completed the agency-wide rollout of “Agentic AI” capabilities for all employees on January 12, 2026. This deployment moves beyond simple chat interfaces to advanced systems capable of planning and executing multi-step regulatory workflows. To mark the launch, the agency initiated a two-month Agentic AI Challenge for staff to develop custom solutions for scientific computing, with winners to be showcased at the FDA’s Scientific Computing Day.

How it applies to AI in Healthcare:

This technology speeds up the review of new medical products by automating the summarization of adverse event trends and flagging inconsistencies in clinical trial submissions. For healthcare providers, this means a more efficient regulatory body that can identify safety signals faster and reduce the time it takes for breakthrough treatments to reach the market.

2) FDA-EMA Joint Framework for Drug Development (Jan 16, 2026)

On January 14, 2026, the FDA and the European Medicines Agency (EMA) published a landmark joint document: “Guiding Principles of Good AI Practice in Drug Development.” This accord establishes 10 core principles—including human-centric design, GxP-aligned data governance, and lifecycle management—to harmonize how AI is regulated in the pharmaceutical sector across the Atlantic.

Guiding Principles of Good AI Practice

How it applies to AI in Healthcare:

The framework calls for explainable AI, steering developers away from “black box” models so that clinical decisions are transparent and traceable. This standardization helps accelerate drug discovery, reduces the need for animal testing through digital simulations, and ensures that AI tools used in patient care are continuously monitored for accuracy as medical data evolves.

Cross-Cutting Themes

The recent mandates from the Treasury Board of Canada, Health Canada, and the FDA/EMA joint framework reveal four critical shifts in the regulatory landscape:

- The End of the “Legacy” Free Pass: Regulations are no longer just for new products. Canada’s mandate for legacy systems (ADMS) signifies that any AI tool currently in use must be retroactively audited and brought up to modern standards or decommissioned.

- From “Black Box” to “Open Glass”: There is a global push against opaque algorithms. The FDA-EMA framework and Canada’s Directive on ADMS both demand explainability and traceability. AI must not only work; it must be able to “show its work” to a human auditor or clinician.

- Structured Lifecycle Accountability: Compliance is shifting from a one-time “stamp of approval” to continuous monitoring. The use of XML-based templates in Canada’s REP and the FDA’s “Agentic AI” for safety signal detection mean that regulators now expect real-time data on model drift and performance.

- Strategic Governance Roles: Compliance is moving from the IT basement to the C-Suite. The explicit definition of CIO and CDO roles ensures that data integrity and AI ethics are tied to organizational leadership and liability.

Immediate, concrete checklist for health organisations & vendors

1. Inventory & Impact Assessment

[ ] Legacy Audit: Identify every AI or automated decision-making system (ADMS) developed or procured before June 24, 2025.

[ ] Algorithmic Impact Assessment (AIA): Complete and publish AIAs for all clinical triage or benefit eligibility tools (Mandatory for Canadian federal partners; recommended for private entities to align with future provincial/state laws).

[ ] Risk Tiering: Classify each tool (Class II, III, IV for Canada; High-risk vs. Low-risk for US/EU) to determine the depth of documentation required.
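As a rough illustration of the legacy audit step, the sketch below filters a system inventory by the June 24, 2025 cutoff and lists systems without a completed AIA first. The `SystemRecord` fields, the sample inventory, and the `legacy_audit` helper are all hypothetical; a real inventory would come from an organization's own asset register and would need its own scoping rules.

```python
from dataclasses import dataclass
from datetime import date

# Cutoff date as reported for the Directive; systems developed or procured
# before it are treated as "legacy" in this sketch.
LEGACY_CUTOFF = date(2025, 6, 24)


@dataclass
class SystemRecord:
    # All fields are illustrative assumptions, not a prescribed schema.
    name: str
    procured_on: date
    makes_decisions: bool  # True if the tool automates or supports a decision
    aia_completed: bool


def legacy_audit(inventory):
    """Return in-scope legacy systems, those still missing an AIA first."""
    legacy = [
        s for s in inventory
        if s.makes_decisions and s.procured_on < LEGACY_CUTOFF
    ]
    # False sorts before True, so systems without an AIA lead the list.
    return sorted(legacy, key=lambda s: (s.aia_completed, s.procured_on))


# Hypothetical sample inventory.
inventory = [
    SystemRecord("triage-scorer", date(2023, 3, 1), True, False),
    SystemRecord("benefits-eligibility", date(2024, 11, 15), True, True),
    SystemRecord("chat-faq-bot", date(2025, 9, 2), True, False),
    SystemRecord("room-booking", date(2022, 1, 10), False, False),
]

for s in legacy_audit(inventory):
    status = "AIA done" if s.aia_completed else "AIA missing"
    print(f"{s.name}: procured {s.procured_on.isoformat()}, {status}")
```

Sorting unassessed systems to the top is only one possible prioritization; an organization might instead rank by risk tier or by the number of people affected.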

2. Regulatory & Technical Transition

[ ] REP & CESG Migration: (For Vendors/Manufacturers) Ensure all Class II-IV medical device filings are transitioned from email to the Common Electronic Submission Gateway (CESG) using XML templates.

[ ] Agentic AI Preparedness: Ensure internal data sets are “clean” and high-quality, as FDA’s new agentic systems will more aggressively flag inconsistencies in clinical trial submissions.

[ ] Explainability Protocol: Review model architectures. If a model is “black box,” implement post-hoc explanation tools (e.g., LIME or SHAP) to meet FDA-EMA transparency principles.
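To make the idea of a post-hoc explanation concrete, here is a minimal sketch of permutation importance, a simpler model-agnostic technique than LIME or SHAP and not a substitute for either. It measures how much accuracy drops when one feature column is shuffled. The `black_box` model and the synthetic data are hypothetical stand-ins; whether this kind of evidence would satisfy any regulator is an open question for compliance teams.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Return baseline accuracy and the accuracy drop per shuffled feature."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    drops = []
    for j in range(n_features):
        # Shuffle only column j, leaving every other feature untouched.
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return baseline, drops


def black_box(row):
    # Hypothetical opaque model: it uses feature 0 and ignores feature 1.
    return int(row[0] > 0.5)


# Synthetic data; labels come from the model itself, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [black_box(r) for r in rows]

baseline, drops = permutation_importance(black_box, rows, labels, n_features=2)
print("baseline accuracy:", baseline)
print("accuracy drop per feature:", drops)
```

A large drop for a feature suggests the model relies on it, while a drop of zero suggests the feature is unused. An auditor could read such a report without seeing the model internals.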

3. Human-Centric Safeguards

[ ] Human-in-the-Loop (HITL): Document the specific point where a human clinician reviews and overrides/validates an AI decision (e.g., in cancer screening or drug treatment pathways).

[ ] Bias & Traceability Logs: Establish “traceability protocols” that log the data provenance and the “foundational data” quality checks used during model training.

[ ] Client Feedback Loop: Implement a mandatory mechanism for collecting and documenting user/patient feedback on AI performance as required by the Canadian Directive.
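One way to approach the traceability logs above is an append-only log in which each entry's hash covers the previous entry, so later edits become detectable. The sketch below is an assumption about how such a log could look, not a format prescribed by the Directive; the event names, detail fields, and the `append_entry` and `verify` helpers are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    # Serialize with sorted keys so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, event, details):
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"event": event, "details": details, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Return True only if every entry is intact and correctly chained."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: entry[k] for k in ("event", "details", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical entries covering provenance, a data quality check, and a human override.
log = []
append_entry(log, "data_provenance", {"source": "registry-extract-v3", "rows": 12000})
append_entry(log, "quality_check", {"check": "missing_values", "passed": True})
append_entry(log, "human_override", {"case": "A-102", "ai": "refer", "clinician": "discharge"})

print("log intact:", verify(log))
```

Recording human overrides in the same chain ties the HITL checkpoint to the data lineage. A production system would also need timestamps, access control, and durable storage, none of which this sketch covers.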

4. Governance & Training

[ ] Appoint AI Leads: Formally designate the roles of Chief Data Officer (CDO) and Chief Information Officer (CIO) in relation to AI oversight.

[ ] GxP Alignment: Align data governance with “Good Practice” (GxP) standards, ensuring all AI-related data is verifiable and permanent.

[ ] Agentic AI Literacy: Train regulatory and scientific staff on how to interact with agentic workflows (like the FDA’s Elsa) to ensure submission efficiency.

Disclaimer: This checklist is provided for general informational purposes only and does not constitute legal, regulatory, or professional advice; organizations should consult with their legal and compliance departments to ensure adherence to specific jurisdictional requirements.

Sources

- Mandatory Compliance for Legacy Systems (January 12, 2026): https://www.tbs-sct.canada.ca/pol/doc-eng.aspx?id=32592

- Mandatory Electronic Regulatory Enrolment (January 13, 2026): https://www.canada.ca/en/health-canada/services/drugs-health-products/drug-products/applications-submissions/guidance-documents/regulatory-enrolment-process.html

- Guide on Departmental AI Responsibilities (January 16, 2026): https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/guide-departmental-ai-responsibilities.html

- FDA Agentic AI Deployment (January 12, 2026): https://www.fda.gov/news-events/press-announcements/fda-expands-artificial-intelligence-capabilities-agentic-ai-deployment

- FDA-EMA Joint Framework for Drug Development (January 16, 2026): https://www.ema.europa.eu/en/news/ema-fda-set-common-principles-ai-medicine-development-0